November 12, 2010

Religion as a spandrel

Posted in Language and Myth tagged anthropomorphism, cognitive history, myth, religion, social intelligence, spandrel at 11:20 pm by Jeremy

This section of my chapter, “The Rise of Mythic Consciousness”, examines the emerging view held by cognitive anthropologists of religion as a “spandrel” or a superfluous by-product of the structure of the human mind. While this view yields some compelling results, I suggest that in fact religion, as a product of mythic consciousness, is a natural and inevitable result of the workings of the prefrontal cortex. The chapter is taken from the book I’m writing entitled Finding the Li: Towards a Democracy of Consciousness.

Religion as a spandrel

Evolutionary biologists Stephen Jay Gould and Richard Lewontin once kicked off a deservedly famous paper by describing the great central dome of St. Mark’s Cathedral in Venice. There are beautiful mosaics covering not just the central circles of the dome but also the arches holding it up, along with the triangular spaces formed where two arches meet each other at right angles. These spaces are called spandrels, and Gould and Lewontin used them to illustrate a hugely influential evolutionary theory. A spandrel doesn’t, by itself, serve any purpose. It simply exists as an architectural by-product of the arches which of course serve a crucial purpose, holding up the dome. But if someone looked at the beautifully decorated spandrels without knowing anything about architecture, they would see them as an integral part of the architectural design. The living world, Gould and Lewontin argued, is full of evolutionary spandrels, features or functions that seem to have evolved for a specific purpose but which, on closer evaluation, turn out to have been a superfluous by-product of something else.[1]

For some cognitive anthropologists, religion is a spandrel. To understand how religion evolved, they believe that you need to look, not just at religion itself, but at some of the key cognitive functions of the modern human mind, and what you find is that religion developed as a by-product or side-effect of those functions. “Religion ensues,” writes Scott Atran, “from the ordinary workings of the human mind as it deals with emotionally compelling problems of human existence, such as birth, aging, death, unforeseen calamities, and love.”[2] It’s a “converging by-product of several cognitive and emotional mechanisms that evolved for mundane adaptive tasks.”[3]

These cognitive mechanisms are ones that we’re already familiar with from what we know about the workings of the pfc. They include the all-important theory of mind, along with our ability to think about people even though they’re distant from us in space and time, known as “displacement,” as well as our power to hold “counterfactuals” in our mind: things that we can consider even though we know them not to be true, such as “if Kennedy hadn’t been assassinated…”[4] Without attempting a complete review of how these cognitive mechanisms engendered religion, we’ll examine some of the more important examples to get an idea of how the process is seen to work.

One of the most widespread aspects of religious thought worldwide and throughout history is the belief that a spirit exists separately from a body. In order to see how this might relate to the pfc, consider the underlying process that leads to our capability for displacement. As young infants, we quickly learn that people can apparently disappear and then reappear, sometimes minutes, hours or even days later. A realization that consequently occurs to us is the continued existence of that person even while she has disappeared. This continued existence of someone who’s left our immediate vicinity soon becomes an essential ingredient of our social intelligence, allowing us to think, for example, about what the other person would feel or think if they were there. It is a relatively simple step for the same practice of displacement to apply to the thoughts and feelings of a dead person. In one study showing our “natural disposition toward afterlife beliefs,” some kindergarten-age children were presented with a puppet show, where an anthropomorphized mouse was killed and eaten by an alligator. When the children were asked about the biological aspects of the dead mouse, such as whether he still needed to eat or relieve himself, they were clear that this was no longer the case. Yet when they were asked whether the dead mouse was still thinking or feeling, most children answered yes. These beliefs could not be attributed to cultural indoctrination, because when older children were asked the same questions, they were less likely to attribute continued thoughts and feelings to the dead mouse. These results led the researchers to suggest that the belief that a dead person still exists in some form may actually be our “default cognitive stance,” part of our “intuitive pattern of reasoning.”[5]

This makes even more sense when we remember, as noted in Chapter 2, that the same part of the pfc – the medial prefrontal cortex – is activated when we think about others and when we exercise self-awareness to think about ourselves.[6] As a result of our self-awareness, we tend to “feel our ‘self’ to be the owner of the body, but we are not the same as our bodies.”[7]* It doesn’t take too much of a mental leap to view others in the same way, and therefore assume that when their bodies die, their “selves” continue to exist, especially since we can still think about them, talk about them, and imagine what they would be feeling about something. As one researcher puts it, “social-intelligence systems do not ‘shut off’ with death; indeed most people still have thoughts and feelings about the recently dead.”[8] Given our social intelligence as the source of our unique cognition, it’s much easier for our minds to think of someone still existing but not being there in person, than it is to conceive of them ceasing to exist altogether.

Besides naturally believing in spirits, little children also intuitively believe that everything exists for a purpose, a viewpoint known as teleology, and one that is inextricably intertwined with religious thought. Psychologist Deborah Kelemen has conducted a number of studies of children’s beliefs with some intriguing results. When American 7- and 8-year-olds were asked why prehistoric rocks were pointy, they rejected physical explanations like “bits of stuff piled up for a long period of time” in favor of teleological explanations such as “so that animals wouldn’t sit on them and smash them” or “so that animals could scratch on them when they got itchy.” Similarly, the children explained that “clouds are for raining” and rejected more physical reasons even when told that adults explained them this way. Similar results were found in British children, who are raised in a culture markedly less religious than that of the United States. Kelemen explains these findings as “side effects of a socially intelligent mind that is naturally inclined to privilege intentional explanation and is, therefore, oriented toward explanations characterizing nature as an intentionally designed artifact.”[9]*

As we get older, we may accept other reasons for pointy rocks, but we can never really overcome the powerful drive in our minds to assign agency to inanimate objects and actions. If we’re home alone on a dark, stormy night and we hear a door creaking open in the other room, our first reaction is fear that it might be an intruder, not that it’s just the wind blowing the door open. We have, as Atran puts it, “a naturally selected cognitive mechanism for detecting agents – such as predators, protectors, and prey,” and this mechanism is “trip-wired to attribute agency to virtually any action that mimics the stimulus conditions of natural agents: faces on clouds, voices in the wind, shadow figures, the intentions of cars or computers, and so on.”[10] It’s clear how these “agency detector” systems have served a powerful evolutionary purpose: if it was in fact just the wind blowing the door, there’s no harm in making a mistake other than a brief surge of adrenaline; if however, it really was an intruder in your house but you assumed it was just the wind, the mistake you made could possibly cost you your life.

More generally, the heightened risk of not identifying when another person is the cause of something has led to our universal tendency towards rampant anthropomorphism. Stewart Guthrie, author of a book aptly named Faces in the Clouds: A New Theory of Religion, argues that “anthropomorphism may best be explained as the result of an attempt to see not what we want to see or what is easy to see, but what is important to see: what may affect us for better or worse.” Because of our powerful anthropomorphic tendency, “we search everywhere, involuntarily and unknowingly, for human form and results of human action, and often seem to find them where they do not exist.”[11]

When our anthropomorphic tendency is applied to religious thought, what’s notable is that it’s the human mind, rather than any other aspect of humans, that’s universally applied to spirits and gods. Anthropologist Pascal Boyer notes that “the only feature of humans that is always projected onto supernatural beings is the mind.”[12] This, of course, makes sense in light of the evolutionary development of theory of mind as a core underpinning of our social intelligence.[13] This is the first time that we see a dynamic (which we will see again later in this book) of the human mind imputing its own pfc-mediated capabilities into external constructs of its own creation. In this case, it is the power of symbolic thought that is assigned to the gods, as Guthrie describes:

How religion differs from other anthropomorphism is that it attributes the most distinctive feature of humans, a capacity for language and related symbolism, to the world. Gods are persons in large part because they have this capacity. Gods may have other important features, such as emotions, forethought, or a moral sense, but these are made possible, and made known to humans, by symbolic action.[14]

The approach to understanding religion as a spandrel clearly yields some compelling results, and casts a spotlight on how the pfc’s evolved capabilities can lead to consequences far removed from the original evolutionary causes of its powers. There are, it should be noted, other theories of the rise of religion which, while not contradictory to the spandrel approach, emphasize very different factors, such as the role of religion in maintaining social and moral cohesion in increasingly large and complex societies. However, the spandrel explanation, attractive as it is, tends to lead to a conclusion that religious thought of some kind is a likely, but not an essential part of human cognition. In Boyer’s words, it suggests “a picture of religion as a probable, although by no means inevitable by-product of the normal operation of human cognition.” [15]

In contrast to this view, I would propose that underlying the cognitive structure of religious thought is a mythic consciousness that is a natural and inevitable result of the workings of the pfc. We saw in the previous chapter that what has been conventionally termed a “language instinct” is really a more fundamental “patterning instinct” of the pfc.[16] Similarly here, as we look for the underlying driver of religious thought, we will see that the pfc’s “patterning instinct” leads as inevitably to a mythic consciousness as it does to language itself.

[1] Gould, S. J., and Lewontin, R. C. (1979). “The Spandrels of San Marco and the Panglossian Paradigm: A Critique of the Adaptationist Programme.” Proceedings of the Royal Society of London, Biological Sciences, 205(1161), 581-598.

[2] Atran (2002) op. cit.

[3] Atran, S., and Norenzayan, A. (2004). “Religion’s evolutionary landscape: Counterintuition, commitment, compassion, communion.” Behavioral and Brain Sciences, 27(6), 713-730.

[4] See Chapter 3, page 32 for a discussion of these abilities with respect to language.

[5] Bering, J. M. (2006). “The folk psychology of souls.” Behavioral and Brain Sciences, 29(5), 453-498.

[6] Chapter 2, page 20.

[7] Pyysiäinen, I., and Hauser, M. (2010). “The origins of religion: evolved adaptation or by-product?” Trends in Cognitive Sciences, 14(3), 104-109, citing Bloom, P. (2004) Descartes’ Baby: How the Science of Child Development Explains What Makes us Human, Basic Books. This sense of a “self” distinct from the body is discussed in more detail in Part II of this book.

[8] Boyer, P. (2003). “Religious thought and behaviour as by-products of brain function.” Trends in Cognitive Sciences, 7(3), 119-124.

[9] Kelemen, D. (2004). “Are Children ‘Intuitive Theists’? Reasoning About Purpose and Design in Nature.” Psychological Science, 15(5), 295-301; Kelemen, D., and Rosset, E. (2009). “The Human Function Compunction: Teleological explanation in adults.” Cognition, 111, 138-143. Her view is supported by Michael Tomasello, who believes that “human causal understanding… evolved first in the social domain to comprehend others as intentional agents,” thus allowing our hominid ancestors to predict and explain the behavior of others in their social group. See Tomasello, M. (2000). The Cultural Origins of Human Cognition, Cambridge, Mass.: Harvard University Press, 23-5.

[10] Atran (2002) op. cit.

[11] Guthrie, S. (1993). Faces in the Clouds: A New Theory of Religion, New York: Oxford University Press, 82-3, 187. Italics in original.

[12] Boyer, P. (2001). Religion Explained: The Evolutionary Origins of Religious Thought, New York: Basic Books, 144-5. Italics in original.

[13] See Chapter 2, page 19.

[14] Guthrie, op. cit., 198.

[15] Boyer (2003) op. cit.

[16] Chapter 3, “The ‘language instinct’”, pages 38-40.

October 5, 2010

Three stages in the evolution of language

Posted in Language and Myth tagged language, social intelligence at 11:00 pm by Jeremy

A debate has been raging for years among linguists as to whether the development of language was gradual and early or sudden and more recent. In this section of my book, Towards a Democracy of Consciousness, I argue for three stages of language evolution: (1) mimetic language beginning as long as four million years ago; (2) protolanguage emerging around 300,000 years ago (which would also have been spoken by the Neanderthals); and (3) modern language, which may have begun to emerge about 100,000 years ago but probably only achieved fully modern syntax at the time of the Upper Paleolithic revolution, some 40,000 years ago.

The co-evolution of language and the pfc

Imagine the world of our hominid ancestors over the four million years between Ardi and the emergence of Homo sapiens. As we’ve discussed, it was a mimetic, highly social world, where increasingly complex group dynamics were developing. Communication in this world was probably a combination of touching, gestures, facial expressions, and complex vocalizations, including the many kinds of grunts, growls, shrieks and laughs that we still make to this day. Terrence Deacon believes that it was in this world that “the first use of symbolic reference by some distant ancestors changed how natural selection processes have affected hominid brain evolution ever since.”[1] He argues that “the remarkable expansion of the brain that took place in human evolution, and indirectly produced prefrontal expansion, was not the cause of symbolic language but a consequence of it.”[2]

Enhanced prefrontal cortex was probably a major driver of evolutionary success for pre-human hominids

The findings from Kuhl may help to explain how prefrontal expansion could have been a consequence of symbolic language. If we apply what we learned about how a patterning instinct leads the brain to shape itself based on the patterns it perceives, then we can imagine how a pre-human growing up in mimetic society would hear, see and feel the complex communication going on around him, and how his pfc would shape itself accordingly. Those infants whose pfcs were able to make the best connections would be more successful at realizing how the complex mélange of grunts, rhythms, gestures and expressions they were hearing and seeing patterned themselves into meaning. As they grew up, they would be better integrated within their community and, as such, tend to be healthier and more attractive as mates, passing on their genes for enhanced pfc connectivity to the next generation. It was no longer the biggest, fastest or strongest pre-humans that were most successful, but the ones with the most enhanced pfcs. In Deacon’s words, “symbol use selected for greater prefrontalization” in an ever-increasing cycle, whereby “each assimilated change enabled even more complex symbol systems to be acquired and used, and in turn selected for greater prefrontalization, and so on.”[3]

Deacon’s view of this positive feedback loop between language and evolution is shared by others, including linguist Nicholas Evans who describes it as “a coevolutionary intertwining of biological evolution, in the form of increased neurological capacity to handle language, and cultural evolution, in the form of increased complexity in the language(s) used by early hominids. Both evolutionary tracks thus urge each other on by positive feedback, as upgraded neurological capacity allows more complex and diversified language systems to evolve, which in turn select for more sophisticated neurological platforms.”[4] While this view represents some of the most advanced thinking in the field, it’s interesting to see that it has a solid pedigree – as far back, in fact, as Charles Darwin himself, who wrote in 1871:

If it be maintained that certain powers, such as self-consciousness, abstraction etc., are peculiar to man, it may well be that these are the incidental results of other highly advanced intellectual faculties, and these again are mainly the result of the continued use of a highly developed language.[5]

Perhaps now, armed with this new perspective on our patterning instinct and the coevolution of language and the pfc, we are finally equipped to tackle the conundrum of when and how language actually evolved. Surely it was early and gradual after all, if Deacon and Evans are to be believed? It’s difficult to conceive how it could be anything else. But what, then, accounts for the Upper Paleolithic Great Leap Forward? How could we have had language for millions of years and not produced anything else with symbolic qualities until forty thousand years ago?

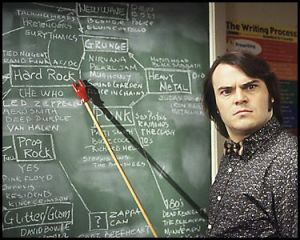

Three stages of language evolution

There is, in fact, a possible resolution to this conundrum that permits the “gradual and early” and the “sudden and recent” camps to both be right. This approach views language as evolving in different stages, with major transitions occurring between each stage, and was first proposed as a solution by linguist Ray Jackendoff in a paper entitled “Possible stages in the evolution of the language capacity.”[6] Jackendoff was well aware that his proposal could “help defuse a long-running dispute,” and he pointed out that if this approach to language evolution becomes widely accepted, it will no longer be meaningful to ask whether one or another hominid “had language,” but rather “what elements of a language capacity” a particular hominid had.

Jackendoff proposed nine different stages of language development, but for the sake of simplicity, I’d like to suggest three clearly demarcated stages. Additionally, I think these three stages can be closely correlated to the different stages of tool technology found in the archaeological record, so an approximate timeframe can also be applied to each stage. Importantly, the last of these stages, the transition to modern language, would be contemporaneous with the Upper Paleolithic revolution, and would therefore solve the conundrum posed by the “sudden and recent” camp. If we consider the analogy of language as a “net of symbols,” then we can visualize each stage as a different kind of net: the first stage may be visualized as a small net that you might use to catch a single fish in a pond; the second stage would be analogous to a series of those small nets tied together; and the third stage could be seen as the vast kind of net that a modern trawler uses to catch fish on an industrial scale. I’ll describe each stage in turn.

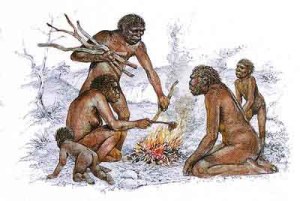

Stage 1: Mimetic language. This stage may have begun as early as Ardi, over four million years ago, and continued until slightly before the advent of modern Homo sapiens, around 200,000-300,000 years ago. It would have been concurrent with what Merlin Donald calls the mimetic stage of human development, as discussed in Chapter 1. It would have involved single words that began to be used in different contexts, thus differentiating them from the vervet calls discussed earlier, which only have meaning in a specific context. Examples of these words could be shhh, yes, no or hot. Jackendoff gives the example of a little child first learning language, who has learned to say the single word kitty “to draw attention to a cat, to inquire about the whereabouts of the cat, to summon the cat, to remark that something resembles a cat, and so forth.”[7] If you were to imagine a campfire a million years ago, a hominid may have pointed to a stone next to the fire and said “hot!” and then might later have caused his friends to laugh by using the same word to describe how he felt after running on a sunny day.

The correlative level of technology would have been the Oldowan and Acheulean stone tools that, as described in Chapter 2, changed very little over millions of years. Interestingly, a recent study employed brain scanning technology to analyze what parts of the brain people use when they make these kinds of stone tools. Oldowan tool-making showed no pfc activity at all, while Acheulean tools required some limited use of the pfc, activating a particular area used for “the coordination of ongoing, hierarchically organized action sequences.”[8]

Stage 2: Protolanguage. This stage, which has also been proposed by linguist Derek Bickerton,[9] may have gradually emerged around 300,000 years ago (the period when Aiello and Dunbar believe that language “crossed the Rubicon”) and remained predominant until the timeframe suggested by Noble & Davidson for the emergence of modern language, roughly 70,000-100,000 years ago. It would have involved chains of words linked together in a simple sentence, but without modern syntax. If we go back to our Stone Age campfire, imagine that the fire’s gone out, but an early human wants to tell his friends that the stones from the fire are still hot. He might point to the area and say “stone hot fire” or alternatively “fire hot stone” or even “hot fire stone.” The breakthrough from mimetic language is that different concepts are now being placed together to create a far more valuable emergent meaning, but the words are still chained together without the magic weave of syntax.

Interestingly, it was around 300,000 years ago that new advances were being made in stone tool technology, leaving behind the old Acheulean stagnation. As described by anthropologist Stanley Ambrose, “regional stylistic and technological variants are clearly identifiable, suggesting the emergence of true cultural traditions and culture areas.” The new techniques, known as Levallois technology from the place in France where they were first discovered, represent according to Ambrose “an order-of-magnitude increase in technological complexity that may be analogous to the difference between primate vocalizations and human speech.” Ambrose believes that this type of “composite tool manufacture” requires the kind of complex problem solving, planning and coordination that is mediated by the pfc, and may even have “influenced the evolution of the frontal lobe.”[10]

Stage 3: Modern language. This stage may have begun to emerge around 100,000 years ago, but possibly only achieved the magical weave of full syntax around the time of the Upper Paleolithic revolution, about 40,000 years ago. By this time, our fully human ancestor could have told his friend: “I put the stone that you gave me in the fire and now it’s hot,” with full syntax and recursion. The correlative level of technology would be the sophisticated tools associated with the Upper Paleolithic revolution, including grinding and pounding tools, spear throwers, bows, nets and boomerangs. The same brains that could handle syntax and recursion could also handle the complex planning and hierarchy of the activities required to conceptualize and make these tools.

But as we’ve seen, the Great Leap Forward involved more than sophisticated tools. It also delivered the first evidence of human behavior that’s not just purely functional but also has ritual or symbolic significance. For the first time, humans are creating art, consistently decorating their bodies, constructing musical instruments and ritual artifacts. I suggest that these innovations in the material realm are correlated to one particular aspect of language that may also have emerged at this time: the use of metaphor.

[1] Deacon, op. cit., 321-2.

[2] Ibid., 340.

[3] Ibid.

[4] Evans 2003, op. cit.

[5] Darwin (1871). The descent of man, and selection in relation to sex. Cited by Bickerton (2009) op. cit., 5.

[6] Jackendoff, R. (1999). “Possible stages in the evolution of the language capacity.” Trends in Cognitive Sciences, 3(7), 272-279.

[7] Ibid.

[8] Stout, D., Toth, N., Schick, K., and Chaminade, T. (2008). “Neural correlates of Early Stone Age toolmaking: technology, language and cognition in human evolution.” Phil. Trans. R. Soc. Lond. B, 363, 1939-1949.

[9] Bickerton, D. (1990) Language and Species, Chicago: University of Chicago Press.

[10] Ambrose, S. H. (2001). “Paleolithic Technology and Human Evolution.” Science, 291(5509), 1748-1753.

September 20, 2010

From grooming to gossip

Posted in Language and Myth tagged language, social intelligence at 9:53 pm by Jeremy

This section of my chapter on language looks at the social networking aspects of the evolution of language. In a way, the development of language happened a lot like the recent growth of the internet. Here’s the section, from the working draft of my book, Finding the Li: Towards a Democracy of Consciousness.

From grooming to gossip

Imagine you’re standing in a cafeteria line. You hear multiple conversations around you: “… I heard that she bought it in…”, “can you believe what Joe did…”, “how much did that cost you…”, “so I said to him…”. Random, meaningless gossip. But don’t be so quick to dismiss it. What you’re hearing may be the very foundation of human language and, as such, the key to our entire human civilization.

This is the remarkable and influential hypothesis of anthropologist team Leslie Aiello and Robin Dunbar. It begins with the well-recognized fact that chimpanzees and other primates use the time spent grooming each other as an important mode of social interaction, through which they establish and maintain cliques and social hierarchies. You may recall, from the previous chapter, the “social brain hypothesis” which is based partially on the correlation noticed between primates living in larger groups and the size of their neocortex. Aiello and Dunbar ingeniously calculated how much time different species would need to spend grooming for their social group to remain cohesive. Larger groups meant significantly more time spent grooming, with some populations spending “up to 20% of their day in social grooming.” When they then calculated the group sizes that early humans probably lived in, they realized that they would have had “to spend 30-45% of daytime in social grooming in order to maintain the cohesion of the groups.” As they point out, this was probably an unsustainable amount of time. Gradually, in their view, the mimetic forms of communication discussed in the previous chapter would have grown more significant, offering a more efficient form of social interaction than grooming, until finally developing into language.[1]

Researchers have also suggested that the miracle weave of language – its syntax – may have arisen from the complexity of social interactions. “In fact,” they say, “the bulk of our grammatical machinery enables us to engage in the kinds of social interaction on which the efficient spread of these tasks would have depended. We can combine sentences about who did what to whom, who is going to do what to whom, and so on, in a fast, fluent and largely unconscious way. This supports the notion that language evolved in a highly social, potentially cooperative context.”[2]

Until now, we’ve been looking at language from the point of view of how an individual’s brain understands it and uses it. But the crucial importance of the social aspect of language suggests that we also need to view it from the perspective of a network. Many theorists, including Merlin Donald, see this perspective as all-important, placing “the origin of language in cognitive communities, in the interconnected and distributed activity of many brains.”[3] Words like “interconnected” and “distributed” bring to mind the recent phenomenon of the rise of the internet, and this is no accident. In many ways, the dynamics of language evolution offer an ancient parallel to the explosive growth of the internet. One person could no more come up with language than one computer could create the internet. In each case, the individual network node – the human brain or the individual computer – needed to achieve enough processing power to participate in a meaningful network, but once that network got going, it became far more important as a driver of change than any individual node.

Another interesting parallel between language and internet evolution is that, in both cases, their growth was self-organized, an emergent network arising from a great many unique interactions without a pre-ordained design. Linguist Nicholas Evans points out that “language structure is seen to emerge as an unintentional product of intentional communicative acts, such as the wish to communicate or to sound (or not sound) like other speakers.”[4] Donald notes that, through these group dynamics, the complexity of language can become far greater than any single brain could ever design. “Highly complex patterns,” he writes, “can emerge on the level of mass behavior as the result of interactions between very simple nervous systems… Language would only have to emerge at the group level, reflecting the complexity of a communicative environment. Brains need to adapt to such an environment only as parts of a distributed web. They do not need to generate, or internalize, the entire system as such.”[5]

Donald compares this dynamic to how an ant colony can demonstrate far more intelligence than any individual ant. We’ll explore in more detail this kind of self-organized intelligence in the second section of this book, but at this point, the time has come to consider what these perspectives might bring to the unresolved question of when language actually arose in our history.

[1] Aiello, L. C., and Dunbar, R. I. M. (1993). “Neocortex Size, Group Size, and the Evolution of Language.” Current Anthropology, 34(2), 184-193.

[2] Szathmary, E., and Szamado, S. (2008), op. cit.

[3] Donald, op. cit., 253-4.

[4] Evans, N. (2003). “Context, Culture, and Structuration in the Languages of Australia.” Annual Review of Anthropology, 32, 13-40.

[5] Donald, op. cit., 284.

August 18, 2010

So what really makes us human?

Posted in Language and Myth tagged cognitive history, mimesis, mirror neurons, prefrontal cortex, social intelligence, symbolization, theory of mind at 10:39 pm by Jeremy

Here’s a pdf file of Chapter 2 of my book draft, Finding the Li: Towards a Democracy of Consciousness. This chapter’s called “So What Really Makes Us Human?” It traces human evolution over several million years, reviewing Merlin Donald’s idea of mimetic culture and the crucial breakthrough of “theory of mind.” It examines the “social brain hypothesis” and explores the current thinking on “altruistic punishment” as a key to our unique human capability for empathy and group values. Finally, it looks at how social intelligence metamorphosed into cognitive fluidity, and how the pfc’s newly evolving connective abilities enabled humans to discover the awesome power of symbols.

Open the pdf file of Chapter 2: “So What Really Makes Us Human?”

As always, constructive comments and critiques from readers of my blog are warmly welcomed.

August 17, 2010

What the pfc did for early humans

Posted in Language and Myth tagged cognitive history, executive function, prefrontal cortex, social intelligence, symbolization at 10:49 pm by Jeremy

This section of my book draft, Finding the Li: Towards a Democracy of Consciousness, looks at how the unique powers of the prefrontal cortex gave early humans the capability to construct tools, exercise self-control, and begin to control aspects of the environment around them. But most of all it gave them the power of symbolic thought, which has become the basis of all human achievement since then. As always, constructive comments are welcomed.

What the pfc did for early humans

Mithen’s “cognitive fluidity” and Coolidge and Wynn’s “enhanced working memory” are really two different ways of describing the same basic dynamic of the pfc connecting up diverse aspects of the mind’s intelligence to create coherent meaning that wasn’t there before. But what specifically did this enhanced capability do for our early human ancestors?

To begin with, it enabled us to make tools. It used to be conventional wisdom that humans are the only tool-makers, so much so that the earliest known species of the genus Homo, which lived around two million years ago, is named Homo habilis, or “handy man.” Then, in the 1960s, Jane Goodall discovered that chimpanzees also used primitive tools, such as placing stalks of grass into termite holes. When Goodall’s boss, Louis Leakey, heard this, he famously replied “Now we must redefine ‘tool’, redefine ‘man’, or accept chimpanzees as humans!”[1] Well, as we’ve seen in the preceding pages, there’s been plenty of work done in redefining “man” since then, but none of this takes away from the fact that humans clearly use tools vastly more effectively than chimpanzees or any other mammals.

To be fair to our old “handy man” Homo habilis, even the primitive stone tools they left behind, called Oldowan artifacts after the Olduvai Gorge in East Africa where they were first found, represented a major advance in working memory over our chimpanzee cousins. Steve Mithen has pointed out that some Oldowan tools were clearly manufactured to make other tools, such as “the production of a stone flake to sharpen a stick.”[2] Making a tool to make another tool is unknown in chimpanzees, and requires determined planning, holding the idea of the second tool in your working memory while you’re preparing your first tool. Oldowan artifacts remained the same for a million years, so even though they were an advance over chimp technology, there was none of the innovation that we associate with our modern pfc functioning. The next generation of tools, called the Acheulian industry, required more skillful stone knapping and showed attractive bilateral symmetry, but they also remained the same for another million years or so.[3] It was around three hundred thousand years ago, shortly before anatomically modern humans emerged, that stone knapping really took off, with stone-tipped spears and scrapers with handles representing “an order-of-magnitude increase in technological complexity.”[4]

None of these tools – even the more primitive Oldowan and Acheulian – can be made by chimpanzees, and they could never have existed without the power of abstraction provided by the pfc.[5]* Planning for this kind of tool-making required a concept of the future, when the hard work put into making the tool would turn out to be worthwhile. As psychologists Liberman and Trope have pointed out, transcending the present to mentally traverse distances in time and in space “is made possible by the human capacity for abstract processing of information.” Making function-specific tools, they note, “required constructing hypothetical alternative scenarios of future events,” which could only be done through activating a “brain network involving the prefrontal cortex.”[6]

Another fundamental human characteristic arising from this abstraction of past and future is the power of self-control. As one psychologist observes, “self-control is nearly impossible if there is not some means by which the individual is capable of perceiving and valuing future over immediate outcomes.”[7] Anyone who has watched children grow up and gradually become more adept at valuing delayed rewards over immediate gratification will not be surprised at the fact that the pfc doesn’t fully develop in a modern human until her early twenties.

This abstraction of the future gave humans the power not only to control themselves but also to control things around them. A crucial pfc-derived human characteristic is the notion of will, the conscious intention to perform a series of activities, sometimes over a number of years, to achieve a goal. Given the fundamental nature of this capability, it’s not surprising that, as Tomasello points out, in many languages the word that denotes the future is also the word “for such things as volition or movement to a goal.” In English, for example, the original notion of “I will it to happen” is embedded in the future tense in the form “It will happen.”[8]

This is already an impressive range of powerful competencies made available to early humans by the pfc. But of all the powers granted to humans by the awesome connective faculties of the pfc, there seems little doubt that the most spectacular is the power to understand and communicate sets of meaningful symbols, known as symbolization.

The symbolic net of human experience

A full generation before Louis Leakey realized it was time to “redefine man,” a German philosopher named Ernst Cassirer who had fled the Nazis was already doing so, writing in 1944 that “instead of defining man as an animal rationale we should define him as an animal symbolicum.”[9] He wasn’t alone in this view. A leading American anthropologist, Leslie White, also believed that the “capacity to use symbols is a defining quality of humankind.”[10] Because of our use of symbols, Cassirer wrote, “compared with the other animals man lives not merely in a broader reality; he lives, so to speak, in a new dimension of reality.”[11]

Why would the use of symbols take us to a different dimension of reality? First, it’s important to understand what exactly is meant by the word “symbol.” In the terminology adopted by cognitive anthropologists, we need to differentiate between an icon, an index, and a symbol. A simple example may help us to understand the differences. Suppose it’s time for you to feed your pet dog. You open your pantry and look at the cans of pet food available. Each can has a picture on it of the food that’s inside. That picture is known as an icon, meaning it’s a “representative depiction” of the real thing. Now, you open the can and your dog comes running, because he smells the food. The smell is an index of the food, meaning it’s “causally linked” to what it signifies. But now suppose that instead of giving your hungry dog the food, you wrote on a piece of paper “FOOD IN TEN MINUTES” and put it in your dog’s bowl. That writing is a symbol, meaning that it has a purely arbitrary relationship to what it signifies, one that can only be understood by someone who shares the same code. Clearly, your dog doesn’t understand symbols, and now he’s pawing at the pantry door trying to get to his food.[12]*

To understand how symbols arose, and why they are so important, it helps to begin with the notion of working memory discussed earlier. Terrence Deacon has suggested that symbolic thought is “a way of offloading redundant details from working memory, by recognizing a higher-order regularity in the mess of associations, a trick that can accomplish the same task without having to hold all the details in mind.”[13] Remember the image of working memory as a blackboard? Now imagine a teacher asking twenty-five children to come up and write on the blackboard what they had to eat that morning before they came to school. The blackboard would quickly fill up with words like cereals and eggs, pancakes and waffles. Now, suppose that, once the blackboard’s filled up, the teacher erases it all and just writes on the blackboard the word “BREAKFAST”. That one word, by common consent, symbolizes everything that had previously been written on the blackboard. And now it’s freed up the rest of the blackboard for anything else.

That’s the powerful effect that the use of symbols has on human cognition. But there’s another equally powerful aspect of writing that one word “BREAKFAST” on the blackboard. Every schoolchild has her own experience of what she ate that morning, but by sharing in the symbol “BREAKFAST,” she can rise above the specifics of her own particular meal and understand that there’s something more abstract that is being communicated, referring to the meal all the kids had before they came to school regardless of what it was. For this reason, symbols are an astonishingly powerful means of communicating, allowing people to transcend their individual experiences and share them with others. Symbolic communication can therefore be seen as naturally emerging from human minds evolving on the basis of social intelligence. This has led one research team to define modern human behavior as “behavior that is mediated by socially constructed patterns of symbolic thinking, actions, and communication.”[14]

Once it got going, symbolic thought became so powerful that it pervaded every aspect of how we think about the world. In Cassirer’s words:

Man cannot escape from his own achievement… No longer in a merely physical universe, man lives in a symbolic universe. Language, myth, art, and religion are parts of this universe. They are the varied threads which weave the symbolic net, the tangled web of human experience. All human progress in thought and experience refines upon and strengthens this net.[15]

Because of our symbolic capabilities, Deacon adds, “we humans have access to a novel higher-order representation system that… provides a means of representing features of a world that no other creature experiences, the world of the abstract.” We live our lives not just in the physical world, “but also in a world of rules of conduct, beliefs about our histories, and hopes and fears about imagined futures.”[16]

For all the power of symbolic thought, there was one crucial ingredient it needed before it could so dramatically take over human cognition. It needed a means by which each individual could agree on the code to be used in referencing what they meant. It had to be a code which everyone could learn and that could be communicated very easily, taking into account the vast array of different things that could carry symbolic meaning. In short, it needed language – that all-encompassing network of symbols that we’ll explore in the next chapter.

[1] Cited in McGrew, W. C. (2010). “Chimpanzee Technology.” Science, 328, 579-580.

[2] Mithen 1996, op. cit., 96.

[3] Proctor, R. N. (2003). “The Roots of Human Recency: Molecular Anthropology, the Refigured Acheulean, and the UNESCO Response to Auschwitz.” Current Anthropology, 44(2: April 2003), 213-239.

[4] Ambrose, S. H. (2001). “Paleolithic Technology and Human Evolution.” Science, 291(2 March 2001), 1748-1753.

[5] Mithen 1996, op. cit., p. 97 relates a failed attempt to get a famous bonobo named Kanzi, who was very advanced in linguistic skills, to make Oldowan-style stone tools.

[6] Liberman, N., and Trope, Y. (2008). “The Psychology of Transcending the Here and Now.” Science, 322(21 November 2008), 1201-1205.

[7] Barkley, op. cit.

[8] Tomasello, op. cit., p. 43.

[9] Cassirer, E. (1944). An Essay on Man, New Haven: Yale University Press, 26.

[10] Cited by Renfrew, C. (2007). Prehistory: The Making of the Human Mind, New York: Modern Library: Random House, 91.

[11] Cassirer, op. cit.

[12] The distinction, originally made by American philosopher Charles Sanders Peirce, is described in detail in Deacon, T. W. (1997). The Symbolic Species: The Co-evolution of Language and the Brain, New York: Norton; and is also referred to by Noble, W., and Davidson, I. (1996). Human Evolution, Language and Mind: A psychological and archaeological inquiry, New York: Cambridge University Press. I am grateful to Noble & Davidson for the powerful image of writing words to substitute for food in the dog’s bowl as an example of a symbol.

[13] Deacon, op. cit., p. 89.

[14] Henshilwood, C. S., and Marean, C. W. (2003). “The Origin of Modern Human Behavior: Critique of the Models and Their Test Implications.” Current Anthropology, pp. 627-651.

[15] Cassirer, op. cit.

[16] Deacon, op. cit., p. 423.

August 10, 2010

From social intelligence to cognitive fluidity

Posted in Language and Myth tagged prefrontal cortex, social intelligence at 9:47 pm by Jeremy

This section of my book draft, Finding the Li: Towards a Democracy of Consciousness, examines how humans originally developed a social intelligence which evolved over time to what’s been called “cognitive fluidity” by renowned archaeologist Steve Mithen. It ties in Mithen’s view with the theory of Coolidge & Wynn that enhanced working memory is responsible for this unique aspect of human cognition. As always, constructive comments are welcomed.

From social intelligence to cognitive fluidity

Whether our social intelligence has caused us to be fundamentally cooperative, competitive, or both, there’s one aspect of it that most researchers can agree on: it’s driven by the actions of the pfc. And increasingly, it’s believed that most of the special capabilities of the pfc emerged from its evolution as a tool of social intelligence. Tomasello, among others, speculates that “the evolutionary adaptations aimed at the ability of human beings to coordinate their social behavior with one another” are what underlie “the ability of human beings to reflect on their own behavior and so to create systematic structures of explicit knowledge.”[1] Another researcher notes that “the neuropsychological functions that create the capacity for culture are very much akin to those capacities attributed to executive functioning—inhibition, self-awareness, self-regulation, imitation and vicarious learning.”[2]

In this view, many of our unique abilities that are mediated by the pfc – abstract thinking, rule-making, mental time travel into the past and the future – arose not because they were in themselves vital for human adaptation but as an accidental by-product of our social cognitive skills. Surprisingly, this phenomenon has been found to be fairly common in evolution, and has been given the name “exaptation,” meaning a characteristic that evolved for other usages and later got co-opted for its current role. A classic example of exaptation is bird feathers, which are thought to have originally evolved for regulation of body heat and only later became used as a means of flying.[3]

What is it, then, about the pfc that could take a set of social cognitive skills and transform them into an array of such varied and astonishing capabilities? One answer to this question might be that the pfc is connected to virtually all other parts of the brain, and this gives it the unique capability to merge different inputs, such as vision and hearing, instinctual urges, emotions and memories, into one integrated story. This has led one research team to speculate that the “outstanding intelligence of humans” may result not from “qualitative differences” compared with other primates, but from the pfc’s combination of the same functions which may have developed separately in other species.[4] In fact, the human pfc’s connectivity is dramatically greater than that of other primates. The celebrated neuroscientist, Jean-Pierre Changeux, notes that “from chimpanzee to man one observes an increase of at least 70 percent in the possible connections among prefrontal workspace neurons – undeniably a change of the highest importance.”[5]

Archaeologist Steve Mithen has proposed an influential theory of human evolution on this basis.[6] Mithen begins with the premise that early hominids may have developed specialized, or “domain-specific” skills. For example, they may have developed social intelligence (as discussed above), technical intelligence for tool-making, or increasing knowledge about the natural world, but they were unable to connect these intelligences together. It’s helpful to imagine these domain-specific intelligences like the blades and tools in a Swiss army knife. You can use each of them, but you’d be hard pressed to use them all together at the same time.[7] But, Mithen suggests, at some time in the development of the modern human mind, we developed what he calls “cognitive fluidity,” whereby we started combining these domain-specific intelligences into an integrated meta-intelligence. He gives an example of Neanderthals who may have been socially intelligent and technically able to make clothes and jewellery, but only modern humans, in his view, made the evolutionary jump to combine these skills and make their artefacts in a particular way to “mediate those social relationships.”[8]*

Another research team, Coolidge and Wynn, have focused their attention on a particular pfc capability, known as “working memory,” which may have been the linchpin to permit this kind of cognitive fluidity in humans.[9] Working memory is the ability to consciously “hold something in your mind” for a short time. For example, if someone tells you a phone number and you have to go across the room to write it down, you’ll use your working memory to hold it in your mind until it’s down on paper, at which point it’s freed up for something else. But working memory is far more than just “short-term memory.” Comparable to the random access memory (“RAM”) of a computer, it’s the process used by the mind to keep enough discrete items up and running so they can be joined together to arrive at a new understanding or a new plan. It’s been referred to as a “global workspace… onto which can be written those facts that are needed in a current mental program,”[10] or perhaps more concisely, “the blackboard of the mind.”[11] Changeux notes that there is “a very clear difference between … higher primates and man with regard to the quantity of knowledge that they are capable of holding on working memory for purposes of evaluation and planning,”[12] and Coolidge and Wynn have gone on to argue that it’s the enhanced working memory of humans that’s the crucial differentiating factor for our uniqueness.

[1] Tomasello, op. cit. p. 197.

[2] Barkley, R. A. (2001). “The Executive Functions and Self-Regulation: An Evolutionary Neuropsychological Perspective.” Neuropsychology Review, 11(1), 1-29.

[3] Gould, S. J., and Vrba, E. S. (1982). “Exaptation – A Missing Term in the Science of Form.” Paleobiology, 8(1: Winter 1982), 4-15.

[4] Roth, G., and Dicke, U. (2005). “Evolution of the brain and intelligence.” Trends in Cognitive Sciences, 9(5: May 2005), 250-253.

[5] Changeux, J.-P. (2002). The Physiology of Truth: Neuroscience and Human Knowledge, M. B. DeBevoise, translator, Cambridge, Mass.: Harvard University Press, 108-9.

[6] Mithen, S. (1996). The Prehistory of the Mind, London: Thames & Hudson.

[7] The Swiss army knife metaphor is attributed to Leda Cosmides & John Tooby in Mithen, op. cit. 42.

[8] It should be noted that, although Mithen contrasts modern humans with Neanderthals, the cognitive difference between the two is a matter of great controversy. See Zilhao, J. (2010). “Symbolic use of marine shells and mineral pigments by Iberian Neandertals.” PNAS, 107(3), 1023-1028, for an argument that the difference between the two was demographic/social rather than genetic/cognitive. However, the Neanderthal issue is not crucial to Mithen’s underlying thesis.

[9] Coolidge, F. L., and Wynn, T. (2001). “Executive Functions of the Frontal Lobes and the Evolutionary Ascendancy of Homo Sapiens.” Cambridge Archaeological Journal, 11(2:2001), 255-60. See also: Coolidge, F. L., and Wynn, T. (2005). “Working Memory, its Executive Functions, and the Emergence of Modern Thinking.” Cambridge Archaeological Journal, 15(1), 5-26.

[10] Duncan, J. (2001). “An Adaptive Coding Model of Neural Function in Prefrontal Cortex.” Nature Reviews: Neuroscience, 2, 820-829.

[11] Patricia Goldman-Rakic quoted by Balter, M. (2010). “Did Working Memory Spark Creative Culture?” Science, 328, 160-163.

[12] Changeux, op. cit.

August 6, 2010

Altruistic punishment

Posted in Language and Myth tagged social intelligence at 4:10 pm by Jeremy

Here’s the fifth section of the Chapter 2 draft of my book, Finding the Li: Towards a Democracy of Consciousness. This section discusses our evolved drive for “altruistic punishment” and how that may have overcome the “free rider” problem discussed in the previous section, permitting our social intelligence to evolve altruistically as well as competitively.

Altruistic punishment

Imagine you’re sitting alone in a room. In the next room is someone else, whom you don’t know. You’re never going to meet each other. A researcher walks in holding a hundred dollars and tells you that this sum will be split between you and the stranger in the other room. And the good news is, you’re allowed to decide exactly how you want to split it. But there’s a catch. You can only propose one split. The person in the other room will be told the split and can either accept it or reject it. If he accepts it, the money is shared accordingly. If he rejects it, you’ll both get nothing.

Welcome to the ultimatum game. If you’re like most people, you’ll decide to split the hundred dollars down the middle, so you get $50, the other person will clearly accept his $50, and you’ll both be ahead. Researchers view the ultimatum game as convincing evidence that refutes the earlier view of humans as fundamentally self-interested. If that were the case, then you (“the proposer”) would be more likely to keep $90 and offer $10 to the stranger (“the responder”). The responder would be likely to accept $10 because, being self-interested, he would be happier with $10 than nothing. But that’s not what people do. Responders in fact frequently reject offers below $30, and the most popular amount offered by proposers is $50.[1]
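For readers who like to see the rules spelled out, here is a minimal sketch of a single round of the ultimatum game in Python. The function name `ultimatum_round` and the `min_acceptable` threshold are my own illustrative assumptions, not part of any published model; the $30 threshold simply mirrors the observation that responders frequently reject offers below that amount.

```python
def ultimatum_round(total, offer, min_acceptable):
    """Play one round and return (proposer_payoff, responder_payoff).

    total          -- the sum to be split (100 dollars in the example above)
    offer          -- the amount the proposer offers to the responder
    min_acceptable -- hypothetical threshold below which the responder rejects
    """
    if not 0 <= offer <= total:
        raise ValueError("offer must be between 0 and total")
    if offer >= min_acceptable:
        # Responder accepts: the money is shared as proposed.
        return total - offer, offer
    # Responder rejects: both players walk away with nothing.
    return 0, 0


# A purely self-interested responder accepts any positive offer.
selfish_result = ultimatum_round(100, 10, min_acceptable=1)

# A responder with a sense of fairness (assumed threshold of 30) rejects a low offer.
fair_minded_result = ultimatum_round(100, 10, min_acceptable=30)

# The even split that most proposers actually choose is accepted either way.
even_split_result = ultimatum_round(100, 50, min_acceptable=30)
```

The point of the sketch is only that a responder who rejects a low offer pays a real cost: he ends up with nothing instead of $10, which is what makes the punishment “altruistic.”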

It seems that we humans have a powerfully evolved sense of fairness. So powerful, in fact, that we would rather walk away with nothing than permit someone else to take extreme advantage of us. Researchers call this “altruistic punishment.” But even altruistic punishment is not powerful enough by itself to overcome the free rider problem in human groups. Think back to the Ardi situation. Suppose that sneaky free rider has skulked back to camp and is coming on strongly to one of the females whose partner is out hunting. But there’s another male who had stayed home and sees what’s going on. What does he do? Does he confront the free rider, possibly risking his own life? Or does he turn away and do nothing? This has been called by researchers the problem of the “non-punisher.” In a way, if someone lets a free rider get away with things without punishing him, they’re really a free rider too and deserve to be punished. When modeling these situations, the researchers have indeed found that cooperation can be maintained in sizable groups indefinitely, but only in situations where both free riders and “non-punishers” are punished. These groups would tend to be more effective than groups of self-interested individuals, and their members would be more likely to pass their genes on to later generations.[2]

Thus, the possibility exists that, over thousands of generations, our social intelligence was molded by cooperative group dynamics to evolve an innate sense of fairness, and a drive to punish those who flagrantly break the rules, even if it’s at our own expense. Some researchers have gone so far as to argue that this evolved sense of fairness has led to “the evolutionary success of our species and the moral sentiments that have led people to value freedom, equality, and representative government.”[3]

[1] Gintis et al., op. cit.

[2] Fehr and Fischbacher, op. cit.

[3] Gintis et al., op. cit.

August 4, 2010

The Social Brain Hypothesis

Posted in Language and Myth tagged social intelligence at 4:35 pm by Jeremy

Here’s the fourth section of the Chapter 2 draft of my book, Finding the Li: Towards a Democracy of Consciousness. This section discusses the “social brain” hypothesis that our unique cognitive capabilities came about from dealing with the social complexity of our lives. All constructive comments from readers of my blog are greatly appreciated.

The Social Brain Hypothesis

Around the same time that Premack and Woodruff were coining the phrase “theory of mind,” a series of ground-breaking studies were forming the idea that primate intelligence “evolved not to solve physical problems, but to process and use social information, such as who is allied with whom and who is related to whom, and to use this information for deception.”[1] In the context of what we learned about Ardi’s circumstances 4.4 million years ago, this certainly makes sense. Venturing out on the savannah, hominids couldn’t defend themselves alone against hungry carnivores and banded together for safety. As they did so, they faced ever-increasing cognitive demands from being in bigger social groups. And it wasn’t just the size of the group, but the complexity of the lifestyle that increased. If you went out with your buddies on a foraging trip, how could you make sure that no-one who remained behind would make a pass at your partner?

Decades of research on this subject have, in fact, shown that species of monkeys and apes that typically live in larger groups also have a larger neocortex (the more recently evolved part of the brain that houses the pfc).[2] Even outside of primates, this correlation seems to exist. A recent study of hyenas, for example, shows that the spotted hyena, which lives in more complex societies, has “far and away the largest frontal cortex” of all the different hyena species.[3] For this reason, experts in the field are comfortable stating that “the balance of evidence now clearly favors the suggestion that it was the computational demands of living in large, complex societies that selected for large brains.”[4]

In the early days of the social brain hypothesis, the emphasis was on the competitive aspect of living in complex societies. Researchers would talk about “primate politics” and the name of one book on the subject was Machiavellian Intelligence: Social Evolution in Monkeys, Apes and Humans.[5] In the view of an influential thinker on the subject, Richard Alexander, as hominids became more dominant in their ecology, they no longer needed to evolve better capabilities to deal with the natural environment. Instead, they began to evolve new cognitive skills in order to outcompete each other. In this way, we became (in his words) our own “hostile force of nature,” entering into a “social arms race” with each other.[6] Alexander saw our ancestors as playing a “mental chess game” with the other members of their group, “predicting future moves of a social competitor… and appropriate countermoves”:

In this situation, the stage is set for a form of runaway selection, whereby the more cognitively, socially, and behaviorally sophisticated individuals are able to out maneuver and manipulate other individuals to gain control of resources in the local ecology and to gain control of the behavior of other people…[7]

Outmaneuvering, manipulation and control… are these then the defining characteristics of our human uniqueness? If it sounds bleak, it falls well within a tradition that has interpreted Darwin’s original theory of evolution from the same chilling perspective. As described by some supporters of Alexander’s theory:

The conceptualization of natural selection as a ‘struggle for existence’ of Darwin and Wallace becomes, in addition, a special kind of struggle with other human beings for control of the resources that support life and allow one to reproduce.[8]

While Alexander was honing his theory of the “social arms race,” another biologist, Robert Trivers, was explaining how, from an evolutionary perspective, altruism was really just a sophisticated form of selfishness. In a much cited paper, he described what he called “reciprocal altruism” as an ancient evolutionary strategy that could be seen in the behavior of fish and birds, and he interpreted human altruism in the same way. “Under certain circumstances,” he wrote, “natural selection favors these altruistic behaviors because in the long run they benefit the organism performing them.”[9]

This approach is fully consistent with what’s become generally known as the “selfish gene” interpretation of evolution, as popularized by biologist Richard Dawkins. In this view (which is extensively critiqued later in this book) all evolution can be explained by the “selfish” drive of our genes to replicate themselves. And those special human characteristics that we value so highly are no exception. “Let us try to teach generosity and altruism,” Dawkins suggests, “because we are born selfish.” Alexander himself comes to a similar conclusion, proposing that “ethics, morality, human conduct, and the human psyche are to be understood only if societies are seen as collections of individuals seeking their own self-interest.”[10]

However, in recent years, there’s been an important shift in our understanding of these social dynamics. What has come to seem more remarkable to researchers is not how our bigger brains made us socially competitive, but how they made us more cooperative with each other. In fact, Tomasello sees this as the key differentiating factor between the social intelligence of humans and that of other primates. According to him, it’s the chimpanzees, not the humans, who are obsessed with competing against each other. “Among primates,” he writes, “humans are by far the most cooperative species, in just about any way this appellation is used.”[11] For this reason, Tomasello argues, the “social competition” view may have driven the evolution of primate intelligence, but the cognitive skills that have enabled humans alone to develop language, culture and civilization have been “driven by, or even constituted by, social cooperation.”

Tomasello and his colleague, Henrike Moll, focus on a uniquely human dynamic that they call “shared intentionality,” which is our ability to realize that another person is seeing the same thing we’re seeing, but that they’re also seeing it from a different perspective. “The notion of perspective – we are experiencing the same thing, but potentially differently — is,” Moll and Tomasello believe, “unique to humans and of fundamental cognitive importance.” In their view, it was the special cooperation arising from shared intentionality that “transformed human cognition from a mainly individual enterprise into a mainly collective cultural enterprise involving shared beliefs and practices.”[12]

The idea that we humans evolved a sense of true altruism, where we’re driven to cooperate with our social group by a natural disposition that transcends our selfish needs, may be attractive to some, but it has been shown to have one fundamental flaw: the free-rider problem. Let’s go back to the Ardi example of the band of males venturing out on a multi-day mission into the savannah looking for meat. If one of those males secretly sneaks back to camp and makes out with the females still there, then his genes will be the ones that survive. Evolutionary researchers have, in fact, modeled this problem using game theory and tested real examples in the lab using the famous “prisoner’s dilemma” game[13]*, and have confirmed that only “a few selfish players suffice to undermine the cooperation” of those who trusted each other.[14]

Does this mean that Alexander and company were right, and in fact human social intelligence must be explained by selfish competition? Not so fast. Those same researchers have spent years modeling more realistic versions of what life may have been like for early hominids evolving their social skills, and have arrived at a more sophisticated view of innate human cooperation that they have called “altruistic punishment.”

[1] Emery, N. J., and Clayton, N. S. (2004). “The Mentality of Crows: Convergent Evolution of Intelligence in Corvids and Apes.” Science, 306, 1903-1907.

[2] Dunbar, R. I. M., and Shultz, S. (2007). “Evolution in the Social Brain.” Science, 317(7 September 2007).

[3] Zimmer, C. (2008). “Sociable, and Smart.” The New York Times, March 4, 2008.

[4] Dunbar and Shultz, op. cit.

[5] Cited by Moll, H., and Tomasello, M. (2007). “Cooperation and human cognition: the Vygotskian intelligence hypothesis.” Phil. Trans. R. Soc. Lond. B, 362(1480), 639-648.

[6] Cited by Flinn et al., op. cit.

[7] Ibid.

[8] Ibid.

[9] Trivers, R. L. (1971). “The Evolution of Reciprocal Altruism.” The Quarterly Review of Biology, 46(1), 35-57.

[10] Quoted in Gintis, H., Bowles, S., Boyd, R., and Fehr, E. (2003). “Explaining altruistic behavior in humans.” Evolution and Human Behavior, 24(3), 153-172.

[11] Moll and Tomasello, op. cit.

[12] Ibid.

[13] The prisoner’s dilemma game is a fundamental problem in game theory, where if two people cooperate they both gain a high payoff; but if one player betrays the other who is still cooperating, the betrayer wins everything and the cooperator nothing; if both betray each other, they both get a low payoff.

[14] Fehr, E., and Fischbacher, U. (2003). “The nature of human altruism.” Nature, 425, 785-791.

July 23, 2010

So what really makes us human?

Posted in Language and Myth tagged social intelligence at 10:00 pm by Jeremy

Here’s the beginning of the Chapter 2 draft of my book, Finding the Li: Towards a Democracy of Consciousness. This section covers C. Owen Lovejoy’s theory of an “early hominid adaptive suite” arising from the discovery of Ardi (Ardipithecus ramidus). All constructive comments from readers of my blog are greatly appreciated.

[Click here for a pdf version of Chapter 1]

Chapter 2: So What Really Makes Us Human?

Elephants have trunks; giraffes have necks; anteaters have tongues. What do we humans have that makes us unique? If you’ve read the first chapter of this book, you’re probably ready to answer: our pfc. Right, but other mammals also have pfcs, and advanced primates such as chimpanzees and bonobos[1]* have pfcs almost the same size as ours. So what specifically was the change that led humans to populate the world with seven billion of us, building cities, surfing the internet, and sending rockets into space, while chimps and bonobos still live in the jungle and face the threat of extinction?

There are in fact a whole mess of things that are biologically different about humans when compared with other primates. There’s very little difference in size between males and females (known as “reduced sexual dimorphism”). We walk upright on two legs (“bipedality”). We’re capable of fine manipulation with our hands and can throw projectiles accurately and powerfully. We have much smaller upper canine teeth. We have larger brains relative to the size of our bodies (measured by the “encephalization quotient”). Our infants are helpless for years, relying entirely on adult support. Females don’t show any signs when they’re ovulating (known as “concealed ovulation”) and experience menopause. And we’re virtually hairless, except for our heads and genitals.[2] Now, this seems like a highly varied set of differences. Which of these were the major drivers on the road to worldwide domination?

A basic timeframe may help to crystallize this question. It’s now generally agreed, as a result of genetic sequencing, that we humans shared our last common ancestor with chimpanzees and bonobos a little more than six million years ago. By way of perspective, that’s about as long ago as horses and zebras, lions and tigers, and rats and mice shared their own common ancestors. If you just look at a physical comparison between humans and chimpanzees, that doesn’t seem too unreasonable. But if you look at the massive differences in what we’ve accomplished, there’s clearly something else going on. Michael Tomasello, a leading figure in the field of evolutionary anthropology, makes the point that “if we are searching for the origins of uniquely human cognition, therefore, our search must be for some small difference that made a big difference, some adaptation, or small set of adaptations, that changed the process of primate cognitive evolution in fundamental ways.”[3] But where does the search begin?

A plausible story of our ancestors

In 2009, a major worldwide event occurred in the usually quiet and dusty corridors of evolutionary archaeology. In what was billed as the “breakthrough of the year,”[4] Science magazine published a slew of articles on a newly discovered human ancestor which lived 4.4 million years ago in Ethiopia, known as Ardipithecus ramidus, or more affectionately, Ardi. What made the find so noteworthy was that enough remains had been found to reconstruct Ardi’s whole skeleton, which a large team had painstakingly done over fifteen years of intense excavation and analysis. Ardi’s reconstructed skeleton challenged conventional wisdom because, even though she was fairly close in time to our last common ancestor, she was already walking upright, rather than knuckle-walking or swinging from tree branches as modern chimpanzees do.[5]

In that issue of Science, team member C. Owen Lovejoy used the findings to suggest a plausible story for a set of new behaviors that launched our ancestors on the road to Homo sapiens, which he called “an early hominid adaptive suite.”[6] In an analysis that rivals the best detective stories, he focused on three key characteristics differentiating Ardi and later hominids from chimpanzees: bipedality; the loss of the big upper canine teeth that other primates have; and female concealed ovulation. What on earth could these three apparently unrelated developments have in common?

Lovejoy’s story begins with the thinning out of dense tropical vegetation due to changing climate. Ardi is thought to have lived in woodlands with small patches of forest. In order to get enough to eat, Ardi’s species was becoming omnivorous, increasingly leaving the cover of the woodlands to venture out onto the plains, most likely scavenging for carrion that predators had left behind. But the plains were a dangerous place for hominids that were used to living in the forest. Most predators could easily outrun them and cut them down. So, it made more sense for groups of males to cooperate closely with each other, going out together on multi-day foraging missions in the savanna. On successful missions, the males could bring meat back to their families, which would become an increasingly important source of fuel. Bringing back provisions was a lot easier if you could walk upright, using your arms for carrying, and this may have accelerated the evolution of bipedality. Gradually, the females began to choose males who were good providers for them and their infants, instead of the traditional choice of the most aggressive male. As cooperation between males became more important than aggressive competition, those large upper canines, used mostly for fighting rivals, became less important. In fact, females may have started to select mates with smaller upper canines, preferring a mate that would focus more on bringing home the bacon.

But there was still one problem with this otherwise happy scenario. No male in his right mind is going to go foraging with his buddies for days at a time if he sees his partner is in heat when he’s leaving. He’d be spending his whole time worrying about who’s getting into bed with her while he’s gone. This is where the development of concealed ovulation becomes so important. When ovulation is no longer advertised with a clarion call, the risk of cuckoldry decreases and becomes more manageable.

This is the set of behaviors that Lovejoy calls an early hominid adaptive suite, which he defines as a set of interrelated characteristics which, together, form a pattern that optimizes a species for enhanced evolutionary success. While some of Lovejoy’s arguments remain controversial, perhaps the most important part of the story, which has major ramifications for our own species, is the shift away from individual competitiveness to increased cooperation within a group. This cooperation was the launching pad for Homo sapiens, as we will soon see.

[1] Bonobos, previously known as “pygmy chimpanzees,” are one of the two species comprising the genus Pan, along with the “common chimpanzee.”

[2] For a useful table listing characteristically human attributes, see Flinn, M. V., Geary, D. C., and Ward, C. V. (2005). “Ecological dominance, social competition, and coalitionary arms races: Why humans evolved extraordinary intelligence.” Evolution and Human Behavior, 26(1), 10-46.

[3] Tomasello, M. (1999). “The Human Adaptation for Culture.” Annual Review of Anthropology, 28, 509-529.

[4] Gibbons, A. (2009). “Breakthrough of the Year: Ardipithecus Ramidus.” Science, 326, 598-599.

[5] White, T. D., Asfaw, B., Beyene, Y., Haile-Selassie, Y., Lovejoy, C. O., Suwa, G., and WoldeGabriel, G. (2009). “Ardipithecus ramidus and the Paleobiology of Early Hominids.” Science, 326, 64-86.

[6] Lovejoy, C. O. (2009). “Reexamining Human Origins in Light of Ardipithecus ramidus.” Science, 326(2 October 2009), 74e1-74e8.

March 2, 2010

So What Really Makes Us Human?

Posted in Language and Myth tagged cognitive history, consciousness, executive function, Hunter-gatherers, language, prefrontal cortex, social intelligence, theory of mind at 2:38 pm by Jeremy

Elephants have trunks; giraffes have necks; anteaters have tongues. What do we humans have that makes us unique? At first blush, it seems that answering that should be pretty easy, since we do so much that no other animal does: we build cities, write books, send rockets into space, create art and play music. But these are all the results of our uniqueness, not the cause. OK, how about language? That seems to be something universal to all human beings, which no other animal possesses.[1] Language definitely is a major element in human uniqueness. But what if we try to go back even further, before language as we know it fully developed? What was it about our early ancestors that caused them to even begin the process that ended in language?

The influential cognitive neuroscientist Merlin Donald has suggested that, beginning as far back as two million years ago, there was a long and crucial period of human development that he calls the “mimetic phase.” Here’s how he describes it:

a layer of cultural interaction that is based entirely on a collective web of conventional, expressive nonverbal actions. Mimetic culture is the murky realm of eye contact, facial expressions, poses, attitude, body language, self-decoration, gesticulation, and tones of voice.[2]

What’s fascinating about the mimetic phase is that we modern humans never left it behind. We’ve added language on top of it, but our mimetic communication is still, in Donald’s words, “the primary dimension that defines our personal identity.” You can get a feeling for the power of mimetic expression when you think of communications we make that are non-verbal: prayer rituals, chanting and cheering in a sports stadium, expressions of contempt or praise, intimacy or hostility. It’s amazing how much we can communicate without using a single word.

So before we talked, we chanted, grunted, cheered and even sang.[3] But that still doesn’t explain how we started doing these things that no other creature had done over billions of years of evolution. Over the past twenty years, a powerful theory, called the Social Brain Hypothesis, has gained increasing acceptance as an explanation for the development of our unique human cognition. This hypothesis states that “intelligence evolved not to solve physical problems, but to process and use social information, such as who is allied with whom and who is related to whom.”[4]

The underlying logic of this approach is that, when hominids first began adapting themselves to a less wooded environment, they didn’t have a lot of physical advantages: they couldn’t compete well with other predators for food, and were pretty vulnerable themselves to hungry carnivores. So, more than ever before, they banded together. As they did so, they faced ever-increasing cognitive demands from being in bigger social groups. And it wasn’t just the size of the group, but the complexity of the lifestyle that increased. If you were going out with your buddies on a long hunting trip, how could you know for sure that nobody else was going to jump into bed with your partner while you were gone?

With dilemmas like this to face, early hominids got involved in “an ‘arms race’ of increasing social skill”, learning to use “manipulative tactics” to their best advantage.[5] But a newly emerging implication of this line of research is that cooperation may have played just as large a part as competition in contributing to our human uniqueness. Neuroscientist Michael Gazzaniga summarizes this viewpoint as follows:

although cognition in general was driven mainly by social competition, the unique aspects of human cognition – the cognitive skills of shared goals, joint attention, joint intentions, and cooperative communication needed to create such things as complex technologies, cultural institutions, and systems of symbols – were driven, even constituted, not by social competition, but social cooperation.[6]

In fact, some prominent anthropologists go further and suggest that it was “the particular demands” of our unusually “intense forms of pairbonding that was the critical factor that triggered” the evolution of our large brains.[7]